Object detection, five years later.

(status: as of 6/20, project still in progress)

Motivation

5 years ago I wanted a tool to track my barpath when weightlifting, so I could fix my shoddy technique. This tech was available, but expensive and mostly used by coaches - I wanted something that was trivial to use, where you fed in a video of the lift from roughly side on, with no special motion capture tech. To do this, I used transfer learning, starting from the Faster-RCNN-Inception-V2-COCO general image model from Tensorflow, and providing about 50 images of me doing olympic lifting. Then with OpenCV, we overlaid the bounding box, and tracked the centroid throughout the frames of a video. At the time, this felt like magic.

![]()

(Fig 1: me at cambridge uni gym doing dip snatches. The green line tracks the bar from where it starts around hip height, then it’s launched upwards and caught overhead in a squat.)

The end product (found here) did the job, but it was a tedious slog, spent fixing incompatible Python dependencies, understanding poor documentation, and lifting other people’s half-working code. And the end product was difficult to use - it needed a local build from source, and there was no way to use it on mobile. This meant it never got used for its stated purpose. In 2024, with ML now a thoroughly professionalised endeavour, millions of engineer hours spent making ML development smoother, vast open source models easily available, and with modern LLMs on our side, how much easier is the problem? Let’s find out!

Results, part one: multimodal LLMs

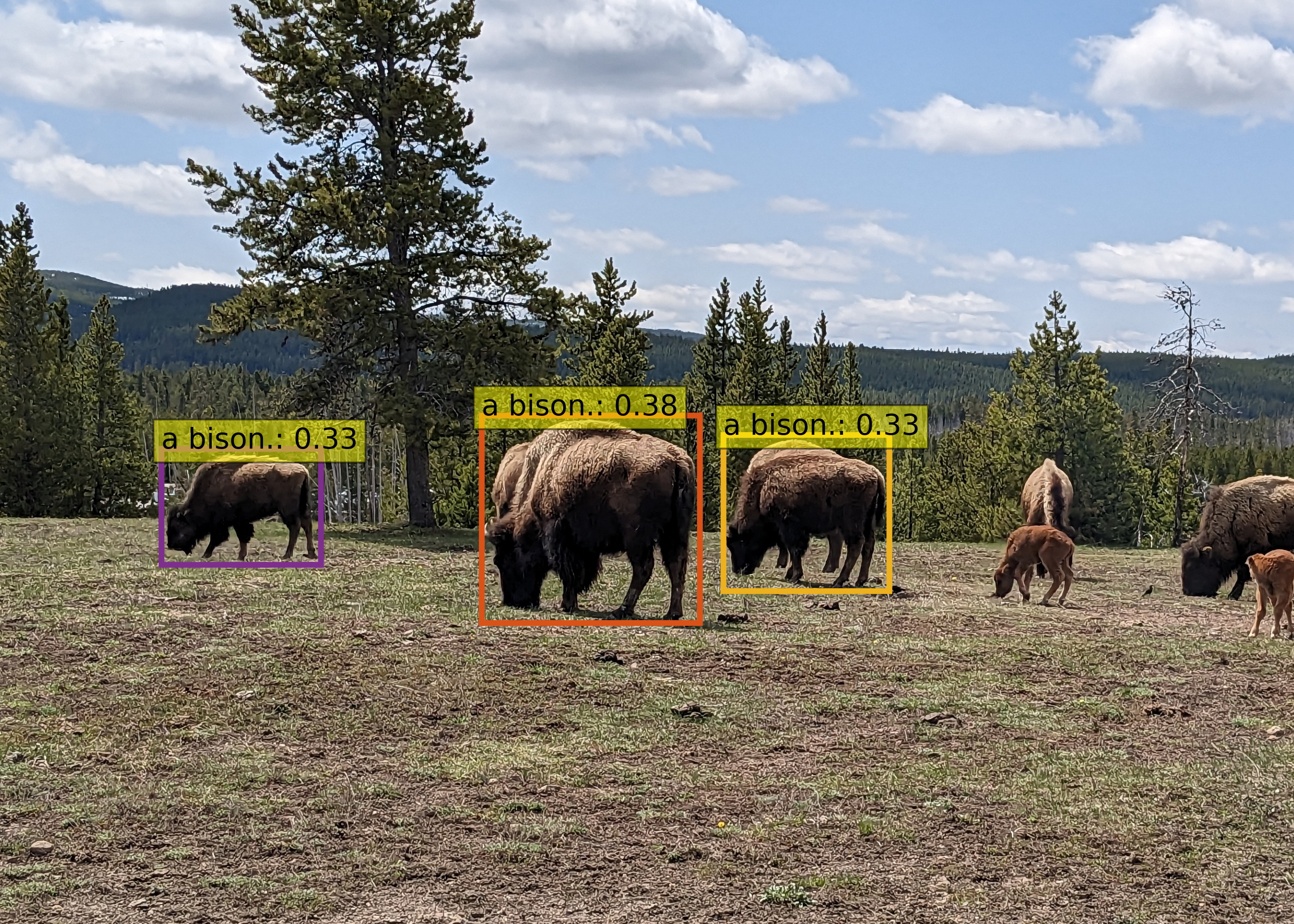

It turns out that faster-RCNN and YOLO are still SotA for the object detection problem, and OpenCV is still the way to annotate images - it’s just somewhat nicer to use now that LLMs can give you the syntax. However, for the sake of trying something new, we’ll use a multimodal LLM - specifically, Grounding DINO. We could also use PaliGemma, Google’s VLM, but that explicitly asks for finetuning. In theory Meta’s Segment Anything Model has the capacity to do what we need, but while that capacity is in the paper it has not yet been released. These models take the form “Given an image, and a text prompt, draw bounding boxes on all objects in the image matching the prompt.” (see below). This is as opposed to more traditional methods where we start from a model that understands generally how to do object detection, and use transfer learning to specify which types of objects we’re trying to detect.

(Fig 2: Grounding DINO with the prompt ‘a bison.’)

(Fig 3: Grounding DINO with the prompt ‘a baby bison.’)

For our use-case, all we need are bounding boxes (as opposed to segmentation masks) so in theory this solves our problem perfectly, without the tedium of labelling images ourselves. However, it has two key disadvantages:

- The models involved are huge, and slow to run (understandably, given the model has to understand natural language in addition to its object detection task).

- The bounding boxes are very sensitive to the prompt used. Time saved by not labelling images is instead spent prompt engineering (in this case, after many variations on ‘barbell plate’, the simple ‘a round weight.’ was chosen, as anything more specific included the barbell and plates as a single object.)

By following the HF example code, and leaning heavily on Claude and 4o, it was the work of an evening to obtain a working object detector. Even the tiny version of the DINO model is too slow to run the object detector on each frame, so we just run the detector on the first video frame, and use OpenCV’s TrackerCSRT to track our bounding box through the image. By plotting the centroid over time, we have an MVP! Code is here. NB: on WSL, this needed sudo apt-get install libqt5x11extras5 libxcb-randr0-dev libxcb-xtest0-dev libxcb-xinerama0-dev libxcb-shape0-dev libxcb-xkb-dev to run, for mysterious OpenCV reasons.

![]()

(Fig 4: v1 of the barbell tracker. Note that the bounding box has drifted off the plate. The tracker has here revealed a technique error, specifcally jumping back in the pull and then falling forwards in the squat.)

Now we have something faintly functional, we can spot some issues:

- It’s still kinda slow (45s to annotate an eight second clip).

- The tracking algorithm doesn’t faithfully follow the original bounding box.

- (nit) Our tracker annotates the bar all the way up and all the way down, which makes analysis harder.

Results, part two: Improvements

Why is this so slow? Profiling (with Scalene) reveals our 45s annotation time was split between ~20s to detect the plate in the inital frame, and ~20s to track the resulting bounding box through the image. Options for speeding up part 1 include:

- The trusty YOLO algorithm.

- Smaller, finetuned, multimodal LLMs.

- Classical detection methods.

Options for speeding up part 2 are harder to come by.

Further work

- deploy this model

- explain the tracking algorithm.

- timing our code

- comparison with YOLO v10.